Executive Summary

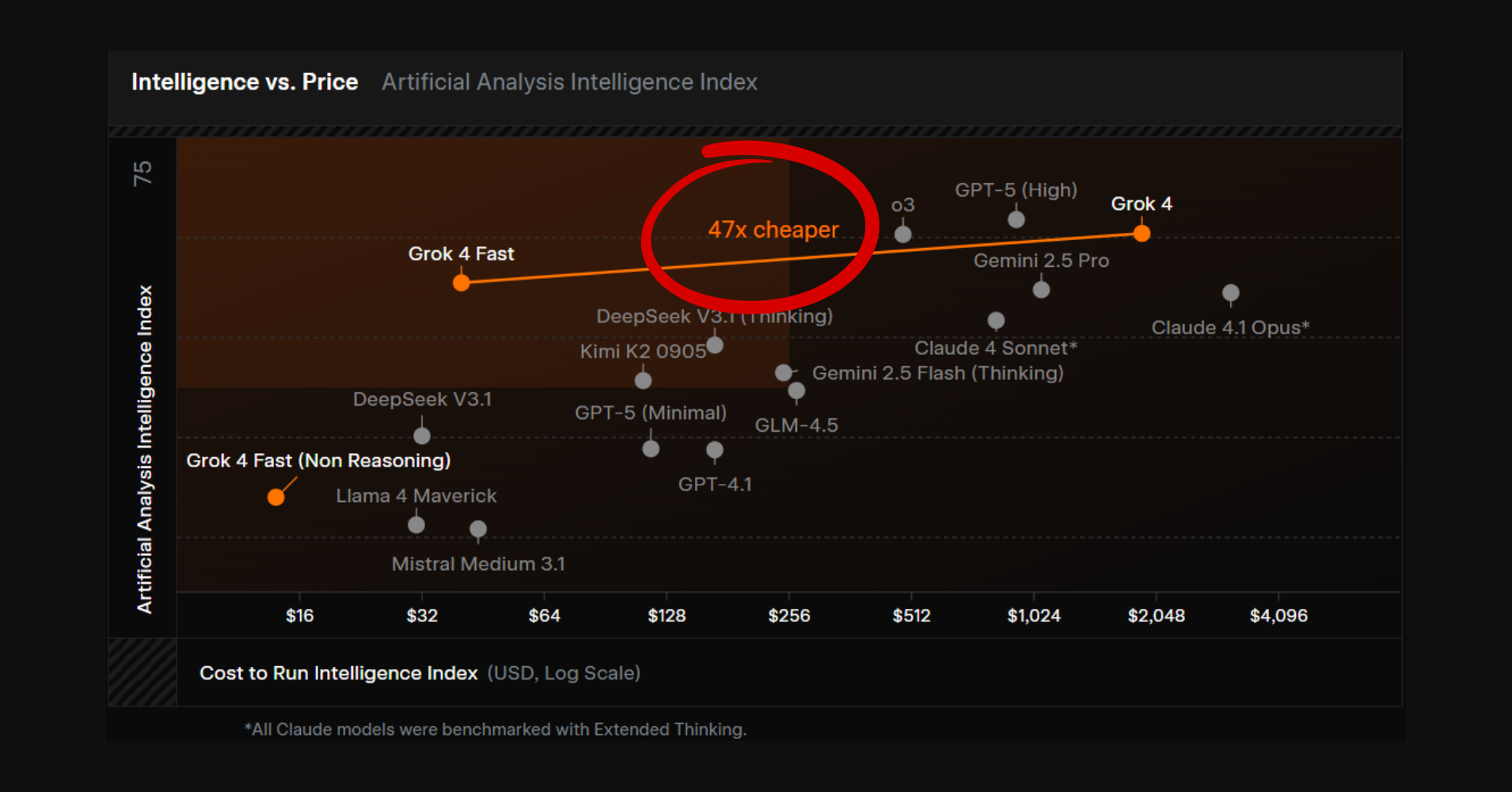

xAI’s Grok 4 Fast makes AI tests and pilots significantly cheaper while maintaining high quality. It features a 2M-token context window and can be up to 47 times cheaper than Grok 4.

It uses approximately 40% fewer “thinking” tokens than Grok 4 to achieve similar scores, which can reduce costs by up to 98% for the same benchmark results. Some gateways offer free, limited-time access, allowing teams to try it with little to no budget. This is a significant win for CX leaders who cite pilot costs as a barrier to adoption.

Quick glossary (technical-to-plain-English bridges)

- Token: Think of tokens like tiny word pieces. Models charge based on the number of pieces they read (input) and write (output).

- Context window: How much info the model can consider at once. 2M tokens = room for very long call logs, policies, and multiple files together.

- Caching: Re-using the same input without paying full price again—like re-playing a song you already downloaded.

- Unified model: One model that can answer fast and also “think deeply,” so you don’t need to switch models mid-test.

- Benchmark: A standard test to compare model quality—like a scorecard.

What xAI shipped (the numbers that matter)

- 2M-token context window: You can load long call logs, full guides, or many files at once. Great for real-world tests.

- One unified model: It handles quick replies and deeper reasoning. No swapping models or extra setup.

- Token pricing (API):

  - Under 128k context: $0.20 per 1M input tokens, $0.50 per 1M output tokens

  - Cached inputs: $0.05 per 1M tokens

  - 128k or more: $0.40 per 1M input tokens, $1.00 per 1M output tokens

- Efficiency gains: About 40% fewer thinking tokens than Grok 4 to reach similar quality, which makes it up to 98% cheaper to hit the same benchmark scores.

- Availability: Short-term free access via some gateways (e.g., OpenRouter, Vercel AI Gateway), plus the standard xAI API.

- Third-party tracking: External trackers note a strong price-to-intelligence ratio and a blended price of approximately $0.28 per 1 million tokens (assuming a 3:1 input-to-output ratio).
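The price list above can be turned into a small estimator. The following is a minimal sketch, not xAI's billing logic. It assumes that "128k" means 128,000 tokens, that the tier is decided by the input size of each request, and that the cached rate is the same in both tiers; none of these details are stated in the list. The function names are illustrative. The blended price is computed as a simple weighted average, which is one plausible reading of the 3:1 figure.

```python
# Prices in USD per 1M tokens, copied from the list above.
TIER_THRESHOLD = 128_000  # assumption: "128k" read as 128,000 input tokens
RATES = {
    False: {"input": 0.20, "output": 0.50},  # under 128k context
    True: {"input": 0.40, "output": 1.00},   # 128k or more
}
CACHED_INPUT_RATE = 0.05  # assumption: same cached rate in both tiers


def request_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request under the assumptions above."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    rates = RATES[input_tokens >= TIER_THRESHOLD]
    fresh_tokens = input_tokens - cached_tokens
    total = (
        fresh_tokens * rates["input"]
        + cached_tokens * CACHED_INPUT_RATE
        + output_tokens * rates["output"]
    )
    return total / 1_000_000


def blended_price(input_rate: float, output_rate: float, input_to_output: float = 3.0) -> float:
    """Weighted average price per 1M tokens for a given input-to-output ratio."""
    return (input_to_output * input_rate + output_rate) / (input_to_output + 1)


print(f"{blended_price(0.20, 0.50):.3f}")  # 0.275, which rounds to the quoted $0.28
```

With a 3:1 ratio the weighted average comes to $0.275 per 1M tokens, in line with the roughly $0.28 reported by external trackers.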

Why this is a breakthrough for pilots

- Lower budgets: Long, realistic tests were once expensive. Now, lower token prices, combined with fewer “thinking” tokens, reduce total spend.

- Realistic trials: The 2M context lets you load entire policies, the relevant knowledge base articles, and lengthy calls without heavy splitting. You test what you’ll actually run.

- Faster loops: One model for both quick answers and deeper reasoning means simpler builds and faster changes during trials.

Pilot math (simple example)

- Example: You test an Agent Assist pilot that uses about 7,000 input tokens and 1,200 output tokens per call, based on:

  - 8–10 assist turns per call

  - ~650–900 input tokens per turn (prompt + transcript slice + KB snippet)

  - ~100–120 output tokens per turn (short, useful guidance)

- Cost per call ≈ (7,000 × $0.20 + 1,200 × $0.50) ÷ 1,000,000 = $0.0020

- If your pilot contains 500 calls, that’s about $1.00 in total

- If you rerun with the same input, caching can reduce the input price to $0.05 per 1M tokens. In other words, standard prompts for specific actions can reduce costs further.

- Result: You can try more versions (prompts, tools, rules) within the same budget that previously covered only one narrow test.

- Note: If any single request reaches 128k tokens or more, that request is billed at the higher-tier prices.
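The arithmetic above can be checked with a few lines of Python. This is a sketch that uses only the numbers from the example and the under-128k prices. The `cached_fraction` parameter is an illustrative assumption about how much of the input is served from cache, since the real share depends on how much of each prompt repeats.

```python
# USD per 1M tokens, under-128k tier (from the pricing section).
INPUT_RATE = 0.20
CACHED_RATE = 0.05
OUTPUT_RATE = 0.50


def call_cost(input_tokens: float, output_tokens: float, cached_fraction: float = 0.0) -> float:
    """USD cost of one call; cached_fraction is the share of input billed at the cached rate."""
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    return (fresh * INPUT_RATE + cached * CACHED_RATE + output_tokens * OUTPUT_RATE) / 1_000_000


# Per-turn ranges from the example: 8-10 turns, 650-900 input and 100-120 output tokens each.
low = call_cost(8 * 650, 8 * 100)
high = call_cost(10 * 900, 10 * 120)
example = call_cost(7_000, 1_200)
best_case_cached = call_cost(7_000, 1_200, cached_fraction=1.0)

print(f"per call: ${low:.4f} to ${high:.4f}, example ${example:.4f}")
# per call: $0.0014 to $0.0024, example $0.0020
print(f"500-call pilot: ${500 * example:.2f}")
# 500-call pilot: $1.00
print(f"example call with all input cached: ${best_case_cached:.5f}")
# example call with all input cached: $0.00095
```

The fully cached figure is a best case. In practice only the repeated part of each prompt (for example, a standard instruction block) would be cached, so the real saving falls somewhere between the two numbers.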

A simple way to validate this in your environment (no guesswork)

- Step 1: Pick 10 real calls that truly need assistance (short, average, and complex)

- Step 2: For each call, simulate 8–10 assist turns, each with:

  - A 200–300 token prompt

  - A 250–500 token transcript slice (latest exchange)

  - A 200–400 token KB chunk

- Step 3: Log tokens automatically from your LLM gateway or SDK (it will report input/output tokens per request)

- Step 4: Average the 10 calls; you’ll get your real per-call input/output footprint in a day

- Step 5: Multiply by daily/weekly volumes for your team to build accurate cost forecasts
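Steps 3 to 5 can be sketched as a short script. It assumes you have exported one record per request with a call identifier and token counts. The field names `call_id`, `input_tokens`, and `output_tokens` are hypothetical, as gateways and SDKs name their usage fields differently. The sample records are placeholders, not measured data, and the prices are the under-128k rates.

```python
from collections import defaultdict
from statistics import mean

INPUT_RATE = 0.20   # USD per 1M input tokens, under-128k tier
OUTPUT_RATE = 0.50  # USD per 1M output tokens, under-128k tier


def per_call_footprint(records):
    """Average input/output tokens per call from per-request usage records."""
    totals = defaultdict(lambda: [0, 0])
    for record in records:
        totals[record["call_id"]][0] += record["input_tokens"]
        totals[record["call_id"]][1] += record["output_tokens"]
    avg_in = mean(t[0] for t in totals.values())
    avg_out = mean(t[1] for t in totals.values())
    return avg_in, avg_out


def forecast_cost(avg_in, avg_out, calls):
    """Projected USD spend for a given number of calls."""
    per_call = (avg_in * INPUT_RATE + avg_out * OUTPUT_RATE) / 1_000_000
    return per_call * calls


# Placeholder records for illustration only; replace with your logged usage.
sample = [
    {"call_id": "a", "input_tokens": 700, "output_tokens": 100},
    {"call_id": "a", "input_tokens": 800, "output_tokens": 120},
    {"call_id": "b", "input_tokens": 900, "output_tokens": 110},
]
avg_in, avg_out = per_call_footprint(sample)
print(f"avg per call: {avg_in} in / {avg_out} out")
print(f"forecast for 1,000 calls: ${forecast_cost(avg_in, avg_out, 1_000):.4f}")
```

Grouping by call before averaging matters: averaging raw requests would give a per-turn figure, not the per-call footprint that Step 4 asks for.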

High-impact pilot ideas for contact centres

- Agent Assist: Real-time help with policies and troubleshooting, with low token use per turn.

- Knowledge synthesis: Turn long SOPs and KBs into clear, ready answers; measure accuracy and coverage.

- Search-augmented answers: Test live web/X search for recalls, outages, or policy updates at pilot-friendly cost.

Procurement and governance notes

- Data handling: If you use free gateways, read data-use policies. For strict data rules, use the xAI API and/or synthetic/redacted data in tests.

- Cost controls: Run your own estimates first to get cost baselines. Set token budgets, use caching for repetitive tasks, and limit test windows so costs remain predictable.

- Fair comparisons: When you compare to older pilots, use the same datasets and metrics. Include thinking tokens and context size in your cost math.
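One way to enforce a token budget during a pilot is a small tracker that converts logged usage into spend and reports when the limit is reached. This is a minimal sketch: the `TokenBudget` class is hypothetical, it defaults to the under-128k prices, and it ignores caching and thinking-token accounting, which you would need to add for a fair comparison.

```python
class TokenBudget:
    """Tracks estimated spend against a USD limit (rates are USD per 1M tokens)."""

    def __init__(self, limit_usd: float, input_rate: float = 0.20, output_rate: float = 0.50):
        self.limit_usd = limit_usd
        self.input_rate = input_rate
        self.output_rate = output_rate
        self.spent_usd = 0.0

    def record(self, input_tokens: int, output_tokens: int) -> float:
        """Add one request's usage and return its estimated cost."""
        cost = (input_tokens * self.input_rate + output_tokens * self.output_rate) / 1_000_000
        self.spent_usd += cost
        return cost

    @property
    def remaining_usd(self) -> float:
        return self.limit_usd - self.spent_usd

    def exhausted(self) -> bool:
        return self.spent_usd >= self.limit_usd


budget = TokenBudget(limit_usd=1.00)
for _ in range(100):  # 100 calls at the example footprint of 7,000 in / 1,200 out
    budget.record(7_000, 1_200)
print(f"spent ${budget.spent_usd:.2f}, remaining ${budget.remaining_usd:.2f}")
```

In a real pilot, the test harness would check `exhausted()` before sending each request and stop or alert once the limit is hit.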

Bottom line

Thanks to Grok 4 Fast, cost is no longer a barrier for piloting AI in your contact centre. You can now run high-quality, real-world tests—quickly and affordably—making it easier to discover what really works for your teams and customers.

More details are available in xAI’s official release: https://x.ai/news/grok-4-fast

![Werner [1010]](/content/images/2025/08/LI-Profile-Picture--4--1-1.png)